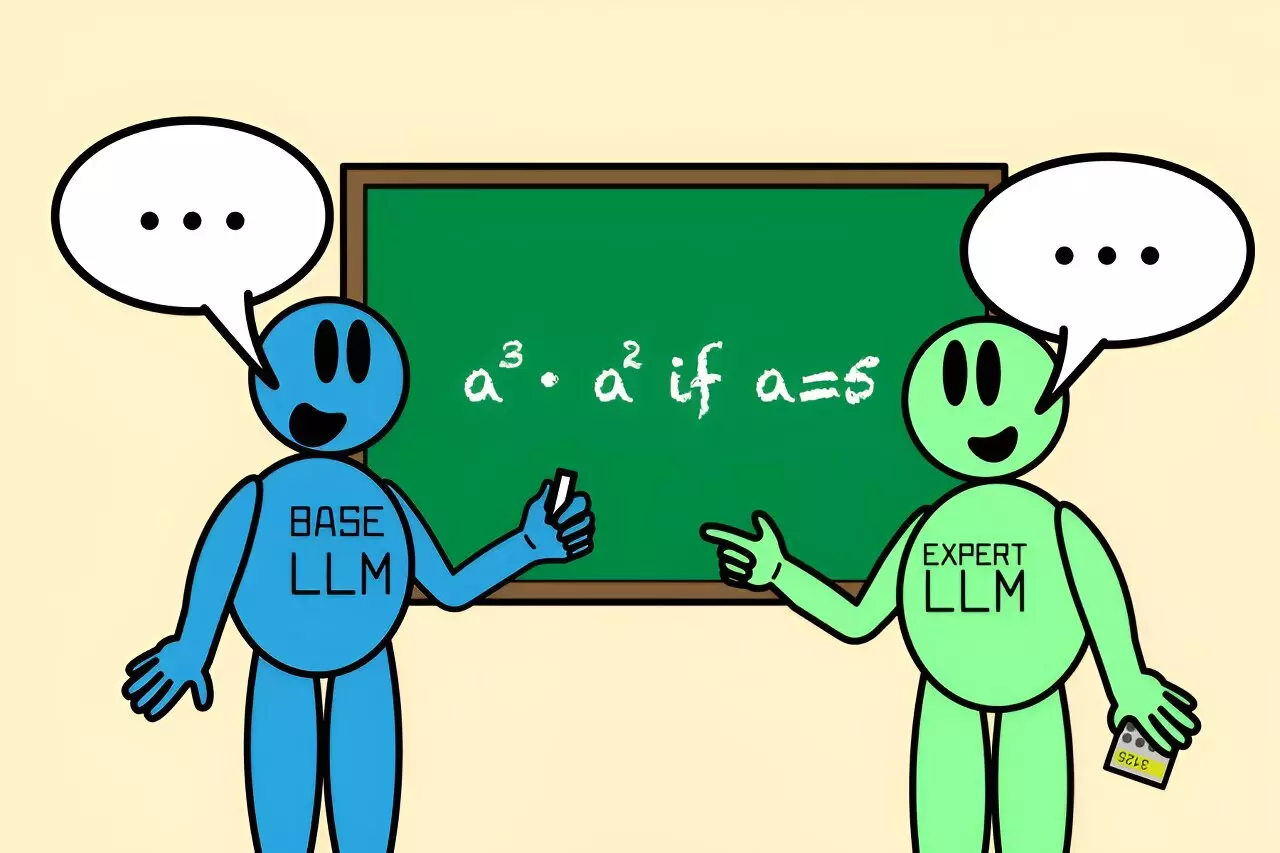

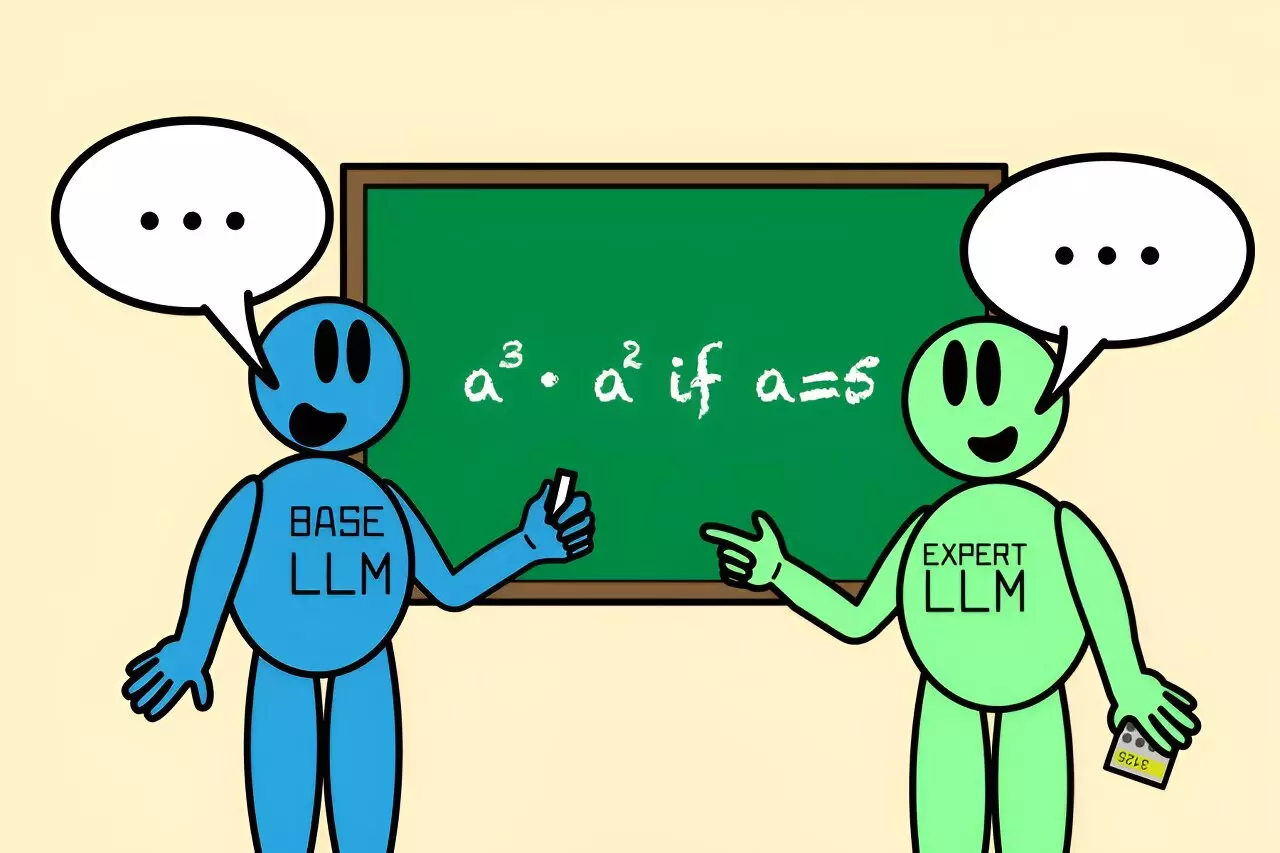

Enhancing Collaboration Among Language Models: The Co-LLM Innovation

Large language models (LLMs) often give inaccurate answers in specialized domains, which has led researchers to look for ways that models can collaborate. An algorithm called Co-LLM, developed at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), lets a general-purpose LLM work alongside a specialized model. Co-LLM is modeled on the way people consult an expert when they reach the limits of their own knowledge, and it aims to improve accuracy on tasks ranging from answering medical questions to solving complex math problems.

Imagine being asked a question during a conversation and realizing that your knowledge is incomplete. In that situation, it is natural to turn to a more knowledgeable friend or colleague. The MIT researchers applied this human habit to LLMs. Rather than relying solely on predefined rules or large volumes of training data to signal when a model should ask for help, Co-LLM lets the general-purpose model recognize where it is out of its depth and hand off to a specialized counterpart. The general-purpose model drafts the response, and the expert model fills in the specific parts it is better equipped to answer, which yields more accurate and contextually relevant answers.
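To make the hand-off concrete, here is a toy sketch of collaborative decoding. It is not the CSAIL implementation: it assumes, as a simplification, that the decision is made one token at a time, and the two "models" (`toy_base`, `toy_expert`), the scoring function `toy_defer_score`, and the 0.5 threshold are all hypothetical stand-ins that use scripted rules instead of real networks.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for a language model: given the tokens so far,
# it returns its proposed next token.
NextToken = Callable[[List[str]], str]


def collaborative_decode(
    prompt: List[str],
    base_model: NextToken,
    expert_model: NextToken,
    defer_score: Callable[[List[str]], float],
    threshold: float = 0.5,
    max_new_tokens: int = 20,
    eos: str = "<eos>",
) -> Tuple[List[str], List[str]]:
    """Generate tokens one at a time; at each step a deferral score decides
    whether the base model or the expert produces the next token.

    Returns the generated tokens and, for each one, which model produced it.
    """
    tokens = list(prompt)
    generated: List[str] = []
    sources: List[str] = []
    for _ in range(max_new_tokens):
        # Hand off to the expert when the score suggests the base model
        # is unlikely to get this token right.
        if defer_score(tokens) > threshold:
            token, source = expert_model(tokens), "expert"
        else:
            token, source = base_model(tokens), "base"
        if token == eos:
            break
        tokens.append(token)
        generated.append(token)
        sources.append(source)
    return generated, sources


# Hypothetical toy models: the generalist knows the sentence frame,
# the expert supplies the specific fact.
BASE_SCRIPT = {"Q:": "Water", "Water": "boils", "boils": "at",
               "at": "about", "100": "degrees"}


def toy_base(tokens: List[str]) -> str:
    return BASE_SCRIPT.get(tokens[-1], "<eos>")


def toy_expert(tokens: List[str]) -> str:
    return "100" if tokens[-1] == "about" else "<eos>"


def toy_defer_score(tokens: List[str]) -> float:
    # Scripted rule: defer only at the point where a specific number is needed.
    return 1.0 if tokens[-1] == "about" else 0.0


generated, sources = collaborative_decode(["Q:"], toy_base, toy_expert, toy_defer_score)
```

Here the generalist writes "Water boils at about", the expert contributes only "100", and the generalist finishes with "degrees". In a real system both callables would wrap model forward passes, and the deferral decision would be learned from data rather than scripted as it is here.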